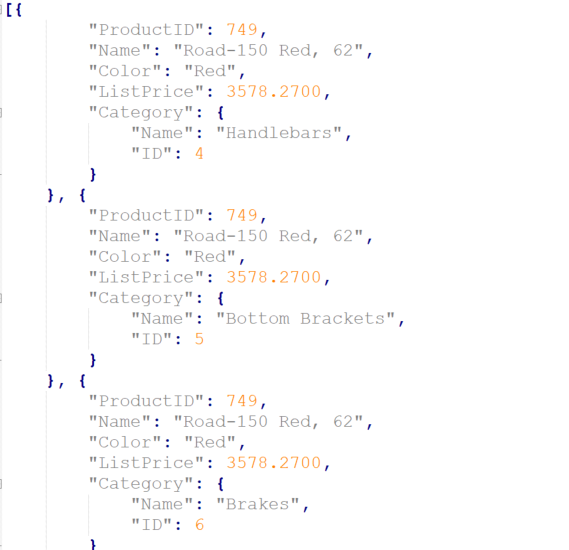

The leading wildcard in `LIKE '%somesearch%'` will be slow. It would help if there were an index on the column in the equality test, AND that column's selectivity is reasonably good: if you have thousands of rows for each value in THAT table, SQL Server may choose not to use the index and table-scan every row instead.

```sql
-- SET SHOWPLAN_TEXT ON
GO
-- SET STATISTICS IO ON; SET STATISTICS TIME ON

SELECT something FROM Requestbody WHERE id = xxx AND Requestbody LIKE '%somesearch%'

SET STATISTICS IO OFF; SET STATISTICS TIME OFF; SET STATISTICS PROFILE OFF
GO
SET SHOWPLAN_TEXT OFF  -- must be in its own batch, like SET SHOWPLAN_TEXT ON
GO
```

Run from the SHOWPLAN comment (highlight from `SET SHOWPLAN_TEXT ON` to the end and execute) to get the query plan; the query is NOT run. Run from the STATISTICS comment to get the number of scans and the amount of I/O; the query IS run, so this is the actual run time of the query.

I would do the statistics first, to get the baseline. In the SHOWPLAN output I would look at which index is used for each table in the query, and whether that seems like the best choice. For example, if the clustered index is used when there is a dedicated index on the main column in your query (i.e. a column which is NOT in the clustered index), I would investigate why that is. Also look at which tables/indexes use a SCAN rather than a SEEK.

Try commenting out the LIKE as well, and see how much that improves things. That is only useful as a just-for-comparison baseline, of course. There is more to the tuning process than this, but these are my first "basic" steps to getting a handle on how things might be improved.

Insert into an unknown table using select

Thanks for the help so far. I can't get it to work, though: doing something like this complains that it cannot insert identity:

```sql
SELECT IDENTITY(int, 1, 1) AS , (sessionid), (AbsolutePath), (query), (RequestHeaders), (RequestBody), (PartnerId),
       (VistaMember), (ResponseHTTPStatusCode), (ResponseHeaders), (ResponseBody), (LogCreated)
INTO
ALTER TABLE #MyTempTable ADD OriginalID INT
```

Equivalent to `FETCH NEXT FROM MyCursor INTO`.

Postgres supports three types for "schemaless" data: JSON (added in 9.2), JSONB (added in 9.4), and HSTORE (added in 8.2 as an extension). Unfortunately, query performance for all three gets substantially worse (2-10× slower) for values larger than about 2 kiB. This is due to how Postgres stores long variable-length data (TOAST). The same performance cliff applies to any variable-length type, like TEXT and BYTEA. This article contains some quick-and-dirty benchmark results to explore how Postgres's performance changes for the "schemaless" data types when they become large. My conclusion is that you should expect 2-10× slower queries once a row gets larger than Postgres's 2 kiB limit.

Most applications should use JSONB for schemaless data. It stores parsed JSON in a binary format, so queries are efficient. Accessing JSONB values is about 2× slower than accessing a BYTEA column. Queries on HSTORE values are slightly faster (~10-20%), so if performance is critical and string key/value pairs are sufficient, it is worth considering. Never use JSON, because the performance is terrible.

Compressed values make queries take about 2× longer, and queries for values stored in external TOAST tables take about 5× longer. In cases where you need excellent query performance, you may want to consider splitting large JSON values across multiple rows. If you are using Postgres 14 or later, you should use LZ4 compression. I didn't test it, but others have found that it uses a bit more space but is significantly faster (1, 2). This should reduce the performance penalty for compressed values. This is a general database problem and not a Postgres problem: MySQL and others have their own performance cliffs for large values. However, Postgres's row length limit is pretty low.
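The Postgres advice about the ~2 kiB TOAST threshold, and the idea of splitting large JSON values across multiple rows, can be sketched in Python. This is a minimal sketch under stated assumptions, not the article author's method. It uses the size of compact JSON text as a stand-in for the stored size, even though JSONB's binary format and Postgres's compression both change the real size. It treats 2048 bytes as the budget, although the real threshold applies to the whole row rather than to one value. It also splits only on top-level keys. The names `ASSUMED_TOAST_BUDGET`, `serialized_size`, `likely_toasted`, `split_into_rows`, and `merge_rows`, and the side-table layout mentioned in the comments, are hypothetical.

```python
import json

# ASSUMPTION: treat ~2 kiB as the per-row budget. Postgres documents the TOAST
# trigger (TOAST_TUPLE_THRESHOLD) as "normally 2 kB", and it applies to the whole
# row, so real code should leave headroom for the other columns.
ASSUMED_TOAST_BUDGET = 2048


def serialized_size(value: object) -> int:
    """Byte length of the compact UTF-8 JSON text for `value`.

    Only an approximation of the stored size: JSONB uses its own binary
    format, and Postgres may compress the value before moving it out of line."""
    text = json.dumps(value, separators=(",", ":"), ensure_ascii=False)
    return len(text.encode("utf-8"))


def likely_toasted(value: object, budget: int = ASSUMED_TOAST_BUDGET) -> bool:
    """Rough check: would this value alone exceed the assumed row budget?"""
    return serialized_size(value) > budget


def split_into_rows(doc: dict, budget: int = ASSUMED_TOAST_BUDGET) -> list[dict]:
    """Greedily pack the top-level keys of `doc` into chunks whose serialized
    size stays within `budget`. Each chunk would be stored as one row of a
    hypothetical side table such as (doc_id, chunk_no, chunk JSONB).

    A single key/value pair that is larger than `budget` on its own is kept
    whole in its own chunk; this sketch never splits individual values."""
    chunks: list[dict] = []
    current: dict = {}
    for key, val in doc.items():
        candidate = {**current, key: val}
        if current and serialized_size(candidate) > budget:
            # Adding this key would overflow the chunk: close it, start a new one.
            chunks.append(current)
            current = {key: val}
        else:
            current = candidate
    if current:
        chunks.append(current)
    return chunks


def merge_rows(chunks: list[dict]) -> dict:
    """Reassemble the original object; chunks hold disjoint top-level keys."""
    merged: dict = {}
    for chunk in chunks:
        merged.update(chunk)
    return merged
```

With this layout, reading a document back means fetching all of its chunk rows and merging them. That trades one large TOASTed value for several small inline ones. Whether this is actually faster for a given workload is not something the sketch shows, so it is worth measuring before adopting.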